Project supervisors: Anadi Chaman and Ivan Dokmanić

Multi-scale convolutional dictionary learning has been used in recent years for various image reconstruction tasks [1,2]. These dictionaries are used to compute sparse representations of images, which are then processed at multiple scales to produce the final reconstructions.

A key advantage of this class of approaches is that it is interpretable from a signal processing point of view while performing close to popular deep neural network architectures such as the U-Net. One example is the recently proposed ISTA U-Net [1], which uses a linear convolutional dictionary similar to the U-Net yet provides state-of-the-art reconstruction performance on tasks such as super-resolution, deraining, and MRI reconstruction.
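To make "sparse representation over a convolutional dictionary" concrete, here is a minimal single-scale sketch: ISTA (iterative shrinkage-thresholding) applied to 1D convolutional sparse coding. It assumes NumPy, a fixed random filter bank instead of a learned one, and circular boundary conditions; the helper names `conv_sparse_code_ista` and `synthesize` are hypothetical. Roughly speaking, ISTA U-Net unrolls iterations of this kind over a learned multi-scale dictionary [1], so this is a simplification for illustration, not the authors' implementation.

```python
import numpy as np

def soft_threshold(v, tau):
    # Proximal operator of tau * ||.||_1: shrink each entry toward zero by tau
    return np.sign(v) * np.maximum(np.abs(v) - tau, 0.0)

def synthesize(z, filters):
    # Linear convolutional dictionary: y = sum_k d_k (*) z_k, with circular convolution
    n = z.shape[1]
    D_hat = np.fft.fft(filters, n=n, axis=1)
    return np.real(np.fft.ifft(np.sum(D_hat * np.fft.fft(z, axis=1), axis=0)))

def conv_sparse_code_ista(y, filters, lam=0.1, n_iter=200):
    """Hypothetical helper: ISTA for
    min_z 0.5 * ||y - sum_k d_k (*) z_k||^2 + lam * sum_k ||z_k||_1."""
    n = y.shape[0]
    D_hat = np.fft.fft(filters, n=n, axis=1)          # zero-padded filter spectra, shape (K, n)
    L = np.max(np.sum(np.abs(D_hat) ** 2, axis=0))    # Lipschitz constant of the gradient
    y_hat = np.fft.fft(y)
    z = np.zeros((filters.shape[0], n))
    for _ in range(n_iter):
        residual_hat = np.sum(D_hat * np.fft.fft(z, axis=1), axis=0) - y_hat
        # Gradient of the data term: adjoint (circular correlation) applied to the residual
        grad = np.real(np.fft.ifft(np.conj(D_hat) * residual_hat, axis=1))
        z = soft_threshold(z - grad / L, lam / L)
    return z

# Usage: sparse-code a signal synthesized from two atoms of a random filter bank
rng = np.random.default_rng(0)
filters = rng.standard_normal((4, 5))
z_true = np.zeros((4, 64))
z_true[1, 10], z_true[3, 40] = 1.0, -2.0
y = synthesize(z_true, filters)
z = conv_sparse_code_ista(y, filters, lam=0.01, n_iter=500)
```

The step size 1/L uses the largest eigenvalue of the synthesis operator's Gram matrix, which for circular convolutions is the maximum over frequencies of the summed squared filter spectra; with this step, the ISTA objective does not increase from one iteration to the next.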

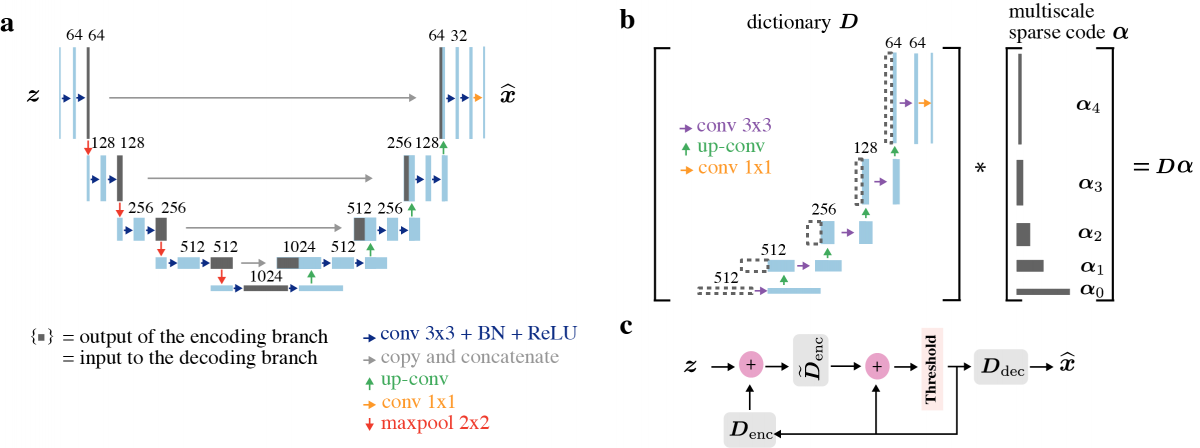

Figure 1: Schematic illustration of the classic U-Net (left panel) and the dictionary model of the ISTA U-Net (right panel).

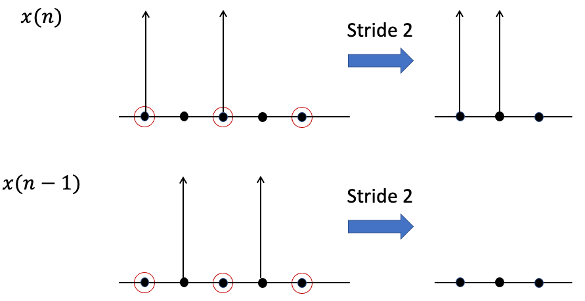

While multi-scale representations are essential for effectively processing natural images, they can cause the final reconstructions to be unstable to small shifts. This is because of the use of stride operations which are not shift equivariant. Figure 2 shows an example of how the result of downsampling (stride) can be unstable to small shifts.

Figure 2: Downsampling a signal and its shifted version can have significantly different outputs.
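A minimal 1D illustration of the effect in Figure 2, assuming circular shifts and a stride of 2 (the toy signal and the helper names are hypothetical): after a one-sample shift, the stride keeps the other polyphase component, so all nonzero samples of the alternating signal disappear.

```python
def downsample(x, stride=2):
    # Keep every `stride`-th sample starting at index 0 (always the same polyphase component)
    return x[::stride]

def circular_shift(x, k):
    # Circularly shift a list to the right by k samples
    k %= len(x)
    return x[-k:] + x[:-k] if k else list(x)

x = [1, 0, 1, 0, 1, 0, 1, 0]
print(downsample(x))                     # [1, 1, 1, 1]
print(downsample(circular_shift(x, 1)))  # [0, 0, 0, 0]
```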

Project proposal

Chaman and Dokmanić proposed new stride layers, adaptive polyphase downsampling and upsampling (APS-D/U), which restore perfect shift invariance in CNN classifiers [3] and shift equivariance in U-Nets [4], respectively. These gains in robustness to shifts were obtained without any loss in network performance and without any additional learnable parameters.
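The following is a simplified 1D sketch of the APS idea as described in [3] and [4], assuming circular shifts, a signal length divisible by the stride, and the l2 norm as the selection criterion; the function names are hypothetical and this is not the authors' implementation. APS-D keeps the polyphase component with the largest norm instead of always keeping component 0, and APS-U writes the samples back at the polyphase index that APS-D selected.

```python
def circular_shift(x, k):
    # Circularly shift a list to the right by k samples
    k %= len(x)
    return x[-k:] + x[:-k] if k else list(x)

def aps_down(x, stride=2):
    # Split x into its polyphase components and keep the one with the largest l2 norm
    components = [x[i::stride] for i in range(stride)]
    energies = [sum(v * v for v in c) for c in components]
    idx = max(range(stride), key=lambda i: energies[i])
    return components[idx], idx

def aps_up(y, idx, stride=2):
    # Write y back at the polyphase index chosen by aps_down; zeros elsewhere
    out = [0] * (len(y) * stride)
    out[idx::stride] = y
    return out

x = [3, 1, 4, 1, 5, 9, 2, 6]
y, idx = aps_down(x)
print(y, idx)  # [1, 1, 9, 6] 1
# Down- then upsampling commutes with every circular shift of the input
for k in range(len(x)):
    y_k, idx_k = aps_down(circular_shift(x, k))
    assert aps_up(y_k, idx_k) == circular_shift(aps_up(y, idx), k)
```

Because the selected component moves together with the shift, the composite operation commutes with circular shifts, unlike a fixed stride. Ties between component norms are broken here by taking the first index, which can break this property; the toy signal has no ties.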

While the lack of shift invariance in CNN classifiers and of shift equivariance in U-Nets has been well studied, it has not yet been quantified in the multi-scale convolutional dictionary learning setting. This project has the following goals.

- We would like to explore how popular dictionary learning methods (especially ISTA U-Net) fare under small shifts of their input. In particular, we would like to compare their robustness to adversarial shifts with that of U-Nets.

- We aim to extend APS layers to ISTA U-Net to restore perfect shift equivariance without any loss in performance.
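One possible way to quantify the first goal, sketched under the assumption of circular 2D shifts and a relative l2 error: compare the model's output on a shifted image with the shifted output for every small shift, and take the worst case as the adversarial shift. The metric `equivariance_errors` and the toy models `blur` and `blur_stride` are hypothetical stand-ins for a trained ISTA U-Net or U-Net; for PyTorch models, `torch.roll` would take the place of `np.roll`.

```python
import numpy as np

def equivariance_errors(model, x, max_shift=3):
    """Hypothetical metric: relative error between model(shift(x)) and shift(model(x))
    for every circular 2D shift (dy, dx) with 0 <= dy, dx <= max_shift."""
    fx = model(x)
    errors = {}
    for dy in range(max_shift + 1):
        for dx in range(max_shift + 1):
            out = model(np.roll(x, (dy, dx), axis=(0, 1)))
            expected = np.roll(fx, (dy, dx), axis=(0, 1))
            errors[(dy, dx)] = np.linalg.norm(out - expected) / (np.linalg.norm(expected) + 1e-12)
    return errors

def blur(x):
    # Circular 3x3 box blur: a shift-equivariant operation
    return sum(np.roll(x, (i, j), axis=(0, 1)) for i in (-1, 0, 1) for j in (-1, 0, 1)) / 9.0

def blur_stride(x):
    # Blur, keep every second pixel, then upsample by zero insertion (not shift-equivariant)
    out = np.zeros_like(x)
    out[::2, ::2] = blur(x)[::2, ::2]
    return out

rng = np.random.default_rng(0)
x = rng.standard_normal((16, 16))
for name, model in [("blur", blur), ("blur_stride", blur_stride)]:
    errors = equivariance_errors(model, x)
    worst = max(errors, key=errors.get)  # the "adversarial" shift for this input
    print(name, worst, round(float(errors[worst]), 3))
```

The strided toy model is equivariant only to shifts that are multiples of the stride, so its worst-case error comes from an odd shift; the same loop applied to real networks would compare their sensitivity to adversarial shifts.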

Pre-requisites

The student should be proficient in training deep learning models in Python with PyTorch or TensorFlow. Background knowledge in signal processing would be helpful but is not required.

Interested students are requested to contact Anadi Chaman.

References

[1] T. Liu, A. Chaman, D. Belius and I. Dokmanić, "Learning Multiscale Convolutional Dictionaries for Image Reconstruction," in IEEE Transactions on Computational Imaging, vol. 8, pp. 425-437, 2022, doi: 10.1109/TCI.2022.3175309.

[2] M. Li et al., "Video Rain Streak Removal by Multiscale Convolutional Sparse Coding," 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), 2018, pp. 6644-6653, doi: 10.1109/CVPR.2018.00695.

[3] A. Chaman and I. Dokmanić, "Truly Shift-Invariant Convolutional Neural Networks," 2021 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), 2021, pp. 3772-3782, doi: 10.1109/CVPR46437.2021.00377.

[4] A. Chaman and I. Dokmanić, "Truly Shift-Equivariant Convolutional Neural Networks with Adaptive Polyphase Upsampling," 2021 55th Asilomar Conference on Signals, Systems, and Computers, 2021, pp. 1113-1120, doi: 10.1109/IEEECONF53345.2021.9723377.