Despite the presence of convolutions, convolutional neural networks can be highly unstable to shifts in their input due to downsampling operations (typically in the form of strided convolutions and pooling layers). This results in the loss of shift-invariance in CNN classifiers and shift-equivariance in networks like U-Nets used for image-to-image regression tasks.

We address this challenge by proposing novel stride layers: adaptive polyphase downsampling (APS-D) and upsampling (APS-U). APS-D allows CNN classifiers to achieve 100% classification consistency under shifts without any loss in accuracy, whereas APS-U enables perfect shift equivariance in symmetric encoder-decoder CNNs on image reconstruction tasks. With the proposed layers, CNNs exhibit perfect shift invariance even before training, making this the first approach that enables truly shift-invariant convolutional neural networks.

Github: https://github.com/achaman2/truly_shift_invariant_cnns

Project video: https://www.youtube.com/watch?v=l2jDxeaSwTs

Adaptive Polyphase Downsampling (APS-D)

One of the primary reasons CNNs lose robustness to shifts is the use of downsampling (popularly known as stride) layers in the networks.

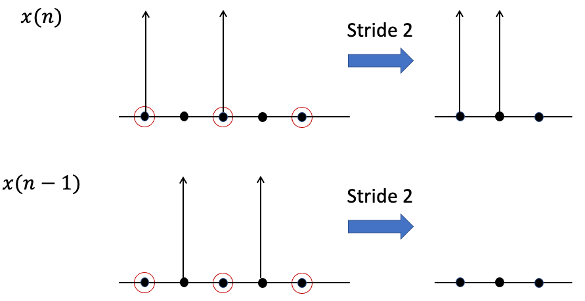

Figure: Conventional linear downsampling on unshifted and shifted signal.

Downsampling an image and its shifted version can produce significantly different outputs. The sensitivity of this operation to shifts makes CNNs less robust to translations. Note that strided pooling layers, such as average or max pooling, can be expressed as a dense (stride-1) pooling operation followed by downsampling, which explains their instability to shifts as well.
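The effect is easy to reproduce on a one-dimensional signal. The sketch below is illustrative only: the signal values, the helper names `downsample` and `circular_shift`, and the use of circular (wrap-around) shifts are assumptions made for the example.

```python
def downsample(x, stride=2):
    """Conventional linear downsampling: keep every `stride`-th sample on a fixed grid."""
    return x[::stride]


def circular_shift(x, k):
    """Shift a list to the right by k samples with wrap-around (assumed boundary handling)."""
    k %= len(x)
    return x[-k:] + x[:-k]


# Hypothetical 1-D signal, chosen only for illustration.
x = [1, 5, 2, 8, 3, 9, 4, 7]
x_shifted = circular_shift(x, 1)   # [7, 1, 5, 2, 8, 3, 9, 4]

y = downsample(x)                  # samples at even positions of x
y_shifted = downsample(x_shifted)  # samples at even positions of the shifted signal

print(y)          # [1, 2, 3, 4]
print(y_shifted)  # [7, 5, 8, 9]
```

A one-sample shift of the input makes the fixed grid land on what were the odd-indexed samples, so the two outputs contain entirely different values rather than shifted copies of each other.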

While existing methods like data augmentation and anti-aliasing help improve robustness to shifts, both have limitations and neither results in complete shift invariance.

In this work, we present Adaptive Polyphase Sampling (APS), an easy-to-implement non-linear downsampling scheme that eliminates this problem. The resulting CNNs yield 100% consistency in classification performance under shifts without any loss in accuracy. In fact, unlike prior works, the networks exhibit perfect consistency even before training, making APS the first approach that makes CNNs truly shift invariant.

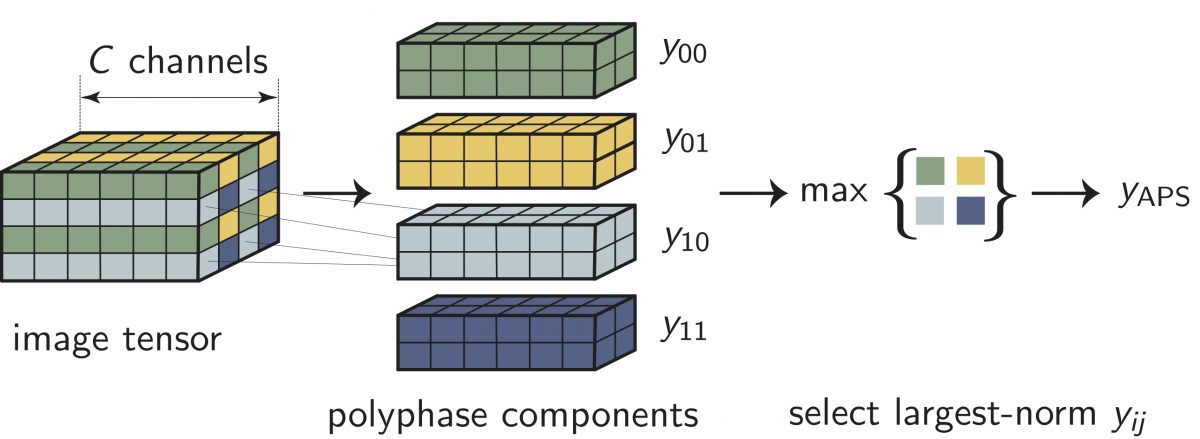

Unlike classical subsampling methods that always sample an image along a fixed grid, APS chooses the sampling grid adaptively, so that the downsampled output stays consistent when the input is shifted.

Figure: APS on multi-channel image tensor.
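The adaptive grid choice can be sketched in a few lines of NumPy. This is a minimal sketch under stated assumptions, not the reference implementation from the repository: the text above does not spell out the selection rule, so the sketch assumes the polyphase component with the largest l2 norm (taken jointly over all channels) is kept. It also assumes circular shifts and spatial sizes divisible by the stride. The function names `polyphase_components`, `aps_downsample`, and `is_circular_shift` are hypothetical.

```python
import numpy as np


def polyphase_components(x, stride=2):
    """Yield ((i, j), component) for each of the stride*stride sampling grids of x (C, H, W)."""
    for i in range(stride):
        for j in range(stride):
            yield (i, j), x[:, i::stride, j::stride]


def aps_downsample(x, stride=2):
    """Keep the polyphase component with the largest l2 norm (assumed selection rule)."""
    idx, best = max(
        polyphase_components(x, stride),
        key=lambda item: np.linalg.norm(item[1]),  # norm over all channels and pixels
    )
    return best, idx


def is_circular_shift(a, b):
    """True if b equals a circularly shifted along its two spatial axes."""
    _, h, w = a.shape
    return any(
        np.array_equal(np.roll(a, (dh, dw), axis=(1, 2)), b)
        for dh in range(h)
        for dw in range(w)
    )


# Random multi-channel "image" and a copy shifted by one pixel in each direction.
rng = np.random.default_rng(0)
x = rng.standard_normal((3, 8, 8))
x_shifted = np.roll(x, (1, 1), axis=(1, 2))

# Adaptive grid: the two outputs agree up to a circular shift.
y, grid = aps_downsample(x)
y_shifted, grid_shifted = aps_downsample(x_shifted)
print(is_circular_shift(y, y_shifted))

# Fixed grid: the outputs are different polyphase components of x.
y_fixed = x[:, ::2, ::2]
y_fixed_shifted = x_shifted[:, ::2, ::2]
print(is_circular_shift(y_fixed, y_fixed_shifted))
```

Shifting the input only permutes (and circularly shifts) its polyphase components, so their norms are unchanged and the same component wins the selection regardless of the shift. Exact ties between norms would need a tie-breaking rule, which this sketch does not handle.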

Publications

2021

@inproceedings{Chaman_2021_CVPR,

author = {Chaman, Anadi and Dokmani{\'c}, Ivan},

title = {Truly Shift-Invariant Convolutional Neural Networks},

booktitle = {Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR)},

month = {June},

year = {2021},

url = {https://openaccess.thecvf.com/content/CVPR2021/papers/Chaman_Truly_Shift-Invariant_Convolutional_Neural_Networks_CVPR_2021_paper.pdf},

pages = {3773--3783}

}

@inproceedings{Chaman_2021_equivariant,

title={Truly shift-equivariant convolutional neural networks with adaptive polyphase upsampling},

author={Chaman, Anadi and Dokmani{\'c}, Ivan},

booktitle={55th Asilomar Conference on Signals, Systems, and Computers},

year={2021},

url = {https://ieeexplore.ieee.org/abstract/document/9723377/}

}