Encoder-decoder CNNs such as U-Nets have been tremendously successful in many imaging inverse problems. This project aims to understand the source of this success by reducing these CNNs to tractable, well-understood modules while retaining their strong empirical performance.

Learning Multiscale Convolutional Dictionaries for Image Reconstruction

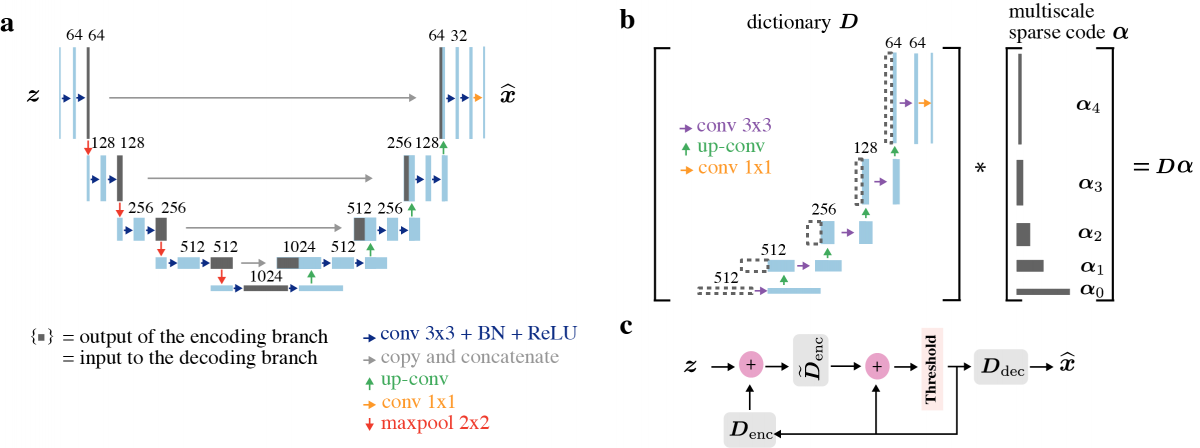

Crucial to our approach is the structure of the constructed multiscale dictionary (Figure 1), which takes inspiration from, and closely follows, the highly successful U-Net architecture.

Figure 1: Schematic illustration of the classic U-Net (left panel) and a dictionary model extracted from the U-Net (right panel).
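To make the idea concrete, here is a minimal NumPy sketch of a multiscale convolutional synthesis operator. It assumes a simplified model in which the image is a sum over scales s of upsampled convolutions, x = sum_s U_{2^s}(sum_k d_{s,k} * alpha_{s,k}): the code maps alpha_{s,k} at scale s live at 1/2^s of the image resolution, are convolved with their filters d_{s,k}, and are upsampled by nearest-neighbor repetition, loosely mirroring the upsampling path of a U-Net decoder. The function names, the nearest-neighbor upsampling, and the convolve-then-upsample order are illustrative assumptions, not the exact construction used in the paper.

```python
import numpy as np


def conv2d_same(image, kernel):
    """2D convolution with zero padding; output has the same size as `image` (naive loops for clarity)."""
    kh, kw = kernel.shape
    ph, pw = kh // 2, kw // 2
    padded = np.pad(image, ((ph, kh - 1 - ph), (pw, kw - 1 - pw)))
    flipped = kernel[::-1, ::-1]  # flip the kernel so this is convolution, not correlation
    out = np.zeros(image.shape, dtype=float)
    for i in range(image.shape[0]):
        for j in range(image.shape[1]):
            out[i, j] = np.sum(padded[i:i + kh, j:j + kw] * flipped)
    return out


def upsample_nearest(x, factor):
    """Nearest-neighbor upsampling: repeat each pixel `factor` times along both axes."""
    return np.repeat(np.repeat(x, factor, axis=0), factor, axis=1)


def multiscale_synthesis(codes, filters):
    """Hypothetical multiscale synthesis x = sum_s U_{2^s}(sum_k d_{s,k} * alpha_{s,k}).

    codes[s]   : list of 2D code maps at 1/2^s of the output resolution
    filters[s] : list of 2D kernels, one per code map at scale s
    """
    out = None
    for s, (code_maps, kernels) in enumerate(zip(codes, filters)):
        # Convolve each code map with its filter at the coarse resolution and sum the channels...
        level = sum(conv2d_same(a, d) for a, d in zip(code_maps, kernels))
        # ...then bring the result up to full resolution and accumulate.
        level = upsample_nearest(level, 2 ** s)
        out = level if out is None else out + level
    return out


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    # Two scales for a 16x16 image: 16x16 codes at scale 0, 8x8 codes at scale 1.
    codes = [[rng.standard_normal((16, 16)) * (rng.random((16, 16)) < 0.05)],
             [rng.standard_normal((8, 8)) * (rng.random((8, 8)) < 0.05)]]
    filters = [[rng.standard_normal((5, 5))], [rng.standard_normal((5, 5))]]
    print(multiscale_synthesis(codes, filters).shape)  # (16, 16)
```

Because each coarse-scale filter is applied before upsampling, a small kernel at scale s yields an atom whose spatial support is roughly 2^s times wider, which is one simple way to obtain atoms of different widths and resolutions from filters of a single size.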

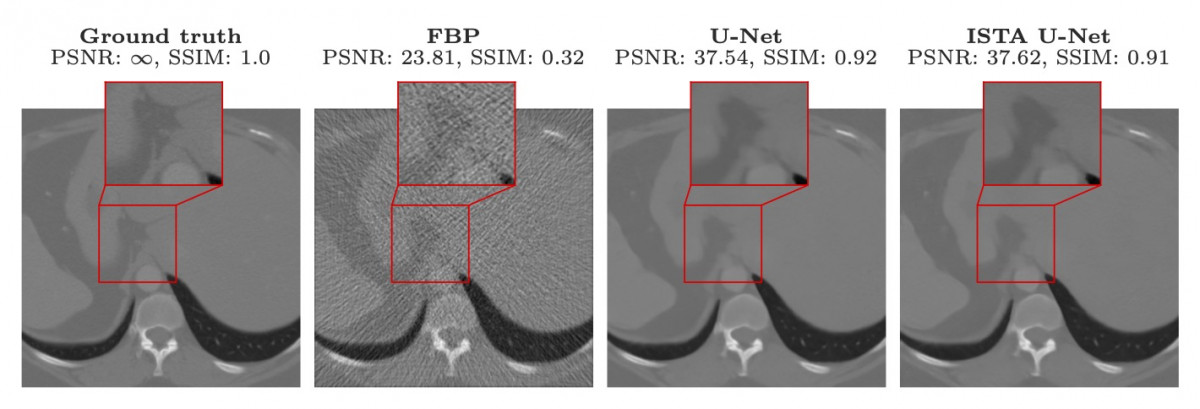

We show that the constructed multiscale dictionary performs on par with leading CNNs in major imaging inverse problems such as CT reconstruction (Figure 2).

Figure 2: Reconstructions of a test sample from the LoDoPaB-CT dataset.
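This page does not spell out how the sparse codes are inferred. As a hedged illustration, assume (as the mention of a learned decoder dictionary below suggests) that codes are computed from a degraded input y, such as a filtered back-projection image in CT, with respect to an encoder dictionary D_enc, and that the reconstruction is then synthesized with a decoder dictionary D_dec. The sketch computes the codes with the classical iterative shrinkage-thresholding algorithm (ISTA) for the lasso problem min_a 0.5*||y - D_enc a||^2 + lam*||a||_1. Dense matrices stand in for the convolutional operators, and the function names and the choice of plain ISTA are assumptions rather than the method used in the paper.

```python
import numpy as np


def soft_threshold(v, tau):
    """Proximal operator of tau*||.||_1: shrink every entry toward zero by tau."""
    return np.sign(v) * np.maximum(np.abs(v) - tau, 0.0)


def ista(y, D, lam, n_iter=200):
    """Approximately solve min_a 0.5*||y - D a||^2 + lam*||a||_1 with ISTA."""
    step = 1.0 / np.linalg.norm(D, 2) ** 2  # 1 / Lipschitz constant of the data-term gradient
    a = np.zeros(D.shape[1])
    for _ in range(n_iter):
        grad = D.T @ (D @ a - y)  # gradient of the least-squares term
        a = soft_threshold(a - step * grad, step * lam)  # gradient step, then shrinkage
    return a


def reconstruct(y, D_enc, D_dec, lam, n_iter=200):
    """Hypothetical encode/decode pipeline: sparse-code y with D_enc, synthesize with D_dec."""
    codes = ista(y, D_enc, lam, n_iter)
    return D_dec @ codes, codes


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    n, m = 32, 64  # signal length, number of atoms
    D_enc = rng.standard_normal((n, m))
    D_enc /= np.linalg.norm(D_enc, axis=0)  # unit-norm atoms
    D_dec = D_enc.copy()  # stand-in; a learned decoder dictionary would differ
    a_true = np.zeros(m)
    a_true[[3, 17, 40]] = [1.5, -2.0, 1.0]
    y = D_enc @ a_true + 0.01 * rng.standard_normal(n)
    x_hat, codes = reconstruct(y, D_enc, D_dec, lam=0.05, n_iter=500)
    print("nonzero codes:", np.count_nonzero(codes))
```

Raising `lam` yields sparser codes at the cost of data fidelity.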

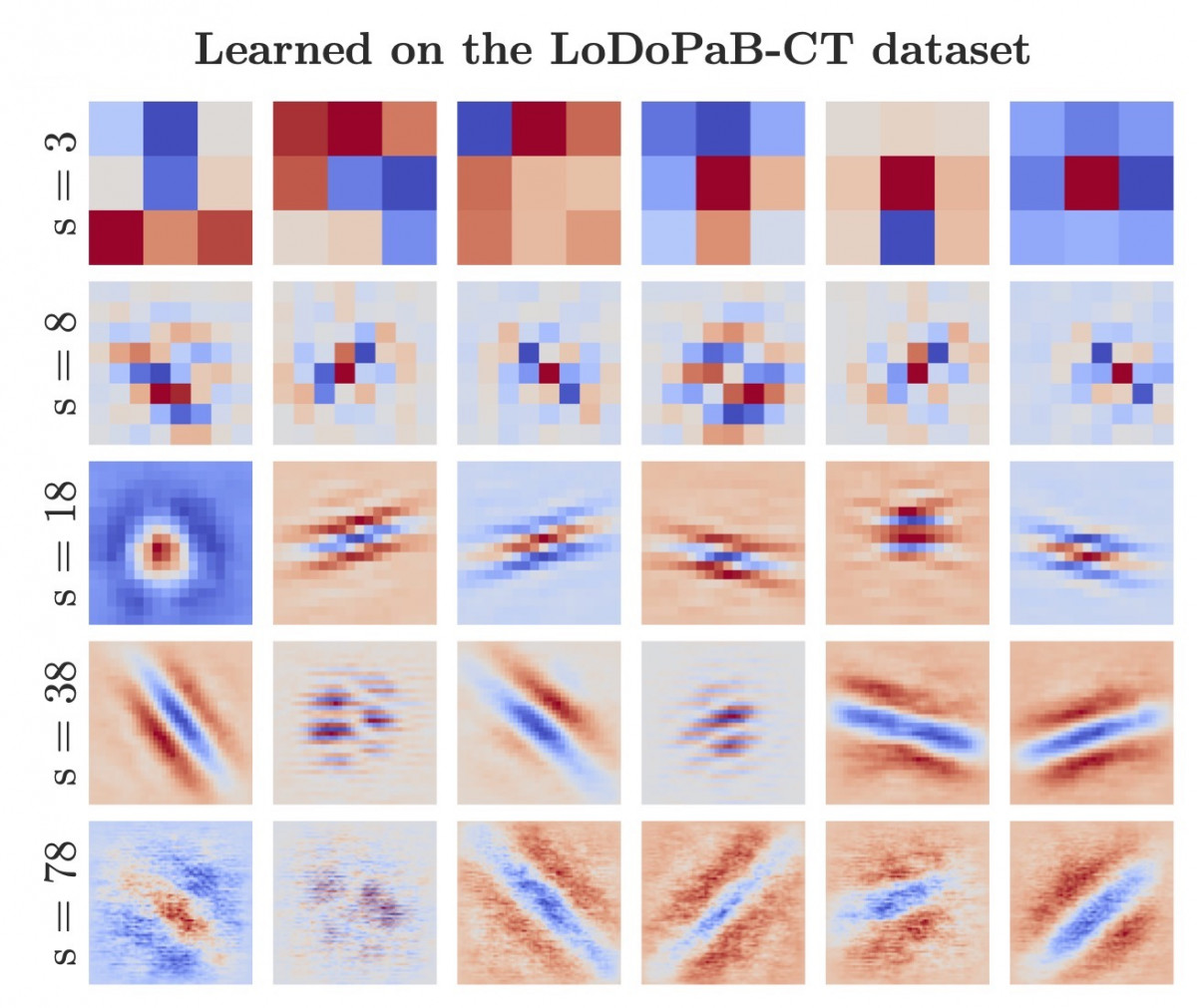

We also analyze our learned dictionaries and their induced sparse representations. We observe that the learned dictionaries contain Gabor-like or curvelet-like atoms with different spatial widths, resolutions, and orientations; furthermore, they indeed exploit multiscale features (Figure 3).

Figure 3: Atoms in a learned decoder dictionary based on the LoDoPaB-CT dataset.
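One simple way to probe observations such as "Gabor-like atoms with different orientations" is to compare each learned atom against a bank of synthetic Gabor atoms. The NumPy sketch below builds a unit-norm 2D Gabor atom (a Gaussian envelope times a cosine carrier) and returns the orientation of the best-matching Gabor atom by absolute normalized correlation. This analysis procedure, the fixed width and wavelength, and the function names are illustrative assumptions; it is not necessarily how the atoms in Figure 3 were analyzed.

```python
import numpy as np


def gabor_atom(size, sigma, theta, wavelength, gamma=1.0, psi=0.0):
    """Unit-norm 2D Gabor atom on a size x size grid (size assumed odd)."""
    half = size // 2
    y, x = np.mgrid[-half:size - half, -half:size - half]
    # Rotate coordinates so the carrier oscillates along direction theta.
    x_rot = x * np.cos(theta) + y * np.sin(theta)
    y_rot = -x * np.sin(theta) + y * np.cos(theta)
    envelope = np.exp(-(x_rot ** 2 + (gamma * y_rot) ** 2) / (2.0 * sigma ** 2))
    carrier = np.cos(2.0 * np.pi * x_rot / wavelength + psi)
    atom = envelope * carrier
    return atom / np.linalg.norm(atom)


def best_orientation(atom, thetas, sigma, wavelength):
    """Orientation whose Gabor atom has the largest |normalized correlation| with a square `atom`."""
    atom = atom / np.linalg.norm(atom)
    scores = [abs(np.sum(gabor_atom(atom.shape[0], sigma, t, wavelength) * atom)) for t in thetas]
    return thetas[int(np.argmax(scores))]


if __name__ == "__main__":
    thetas = np.linspace(0.0, np.pi, 8, endpoint=False)
    # Stand-in for a learned 11x11 atom: a noisy, oriented Gabor pattern.
    rng = np.random.default_rng(0)
    atom = gabor_atom(11, 2.0, np.pi / 4, 4.0) + 0.05 * rng.standard_normal((11, 11))
    print("estimated orientation (rad):", best_orientation(atom, thetas, 2.0, 4.0))
```

Repeating the same search over `sigma` and `wavelength` would also give a rough estimate of each atom's width and frequency.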

Overall, our work takes a step toward closing the performance gap between end-to-end CNNs and sparsity-driven dictionary models. At a meta level, it (re)validates the fundamental role of sparsity in representations of images and imaging operators.

Publications

2021

@misc{Liu2021learning,
  Author = {Tianlin Liu and Anadi Chaman and David Belius and Ivan Dokmani{\'c}},
  Title = {Learning Multiscale Convolutional Dictionaries for Image Reconstruction},
  Year = {2021},
  Eprint = {arXiv:2011.12815},
}